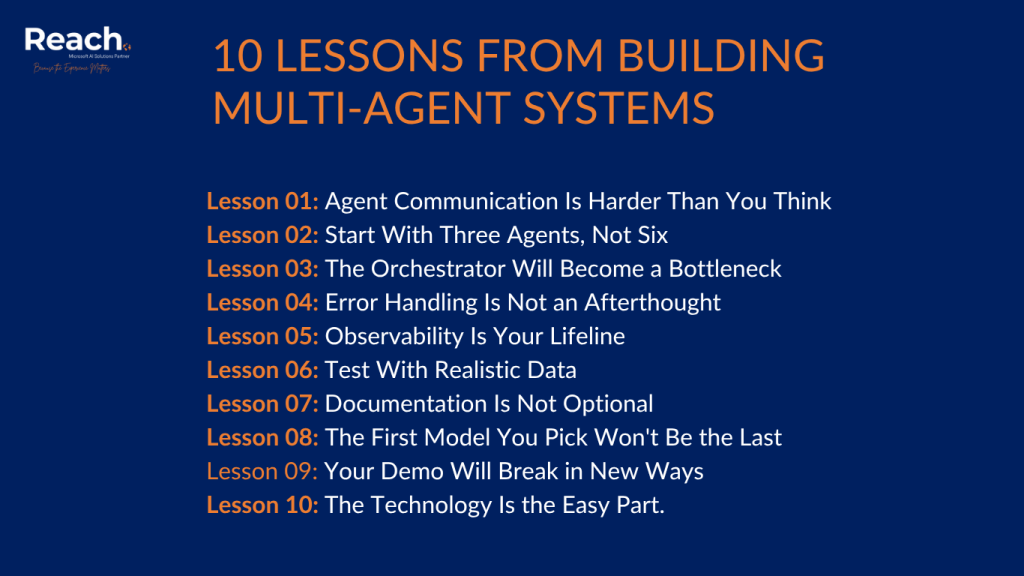

Building our truck brokering multi-agent system for Community Summit NA was equal parts exhilarating and humbling. On paper, the architecture was elegant. In practice? Well, let’s just say reality has a way of teaching you things no documentation ever will.

This post isn’t about theory or best practices you can find in whitepapers. This is about the real stuff—the mistakes we made, the surprises we encountered, and the hard-earned wisdom that only comes from actually building, breaking, and fixing multi-agent systems in the wild.

If you’re about to embark on your own multi-agent journey, grab a coffee and learn from our scars.

Lesson 1: Agent Communication Is Harder Than You Think

What we expected: Agents would pass clean, structured data between themselves. JSON in, JSON out. Simple.

What actually happened: Agent communication became our biggest source of bugs and unexpected behavior.

The problem wasn’t the technical mechanism—APIs and message queues work fine. The problem was semantic. When the Load Analysis agent says a shipment is “urgent,” what does that actually mean to the Matching agent? Is that a priority score? A boolean flag? A time constraint?

We spent days debugging why our Match Scoring agent was making seemingly random decisions, only to discover it was interpreting the Load Analysis agent’s output completely differently than intended.

The fix: We created explicit data contracts between agents. Not just “here’s a JSON schema,” but documented semantic meanings. We added validation layers that caught mismatched expectations early. And we built extensive logging to trace exactly how data transformed as it moved through the agent pipeline.

The takeaway: Invest heavily in defining clear communication contracts upfront. Your future debugging self will thank you.

Lesson 2: Start With Three Agents, Not Six

What we did: Designed all six agents from the start—Intake, Load Analysis, Matching, Geographic Optimization, Match Scoring, Route Analysis.

What we should have done: Started with three core agents and added the others incrementally.

When everything is new—the orchestration pattern, the communication protocols, the error handling—debugging six agents simultaneously is overwhelming. We’d see a bad result and have to trace through six different potential failure points.

Around week two, we temporarily disabled three agents and focused on getting the core workflow (Intake → Load Analysis → Matching) working perfectly. Once that foundation was solid, adding the other agents was straightforward.

The takeaway: Build your multi-agent system incrementally. Get your core agents rock-solid before expanding. It’s slower at first but faster overall.

Lesson 3: The Orchestrator Will Become a Bottleneck (Until It Doesn’t)

The problem: Our centralized orchestrator was handling every piece of communication between agents. As we added more agents and tested with higher volumes, the orchestrator became a performance bottleneck.

The revelation: Not all communication needs orchestration.

We realized our Route Analysis agent needed real-time updates from Geographic Optimization, but these updates didn’t need to flow through the orchestrator. The orchestrator didn’t need to know about every location ping or traffic update.

We introduced direct agent-to-agent communication for specific real-time data streams while keeping strategic coordination centralized. This hybrid approach gave us the performance we needed without sacrificing orchestration benefits.

The takeaway: Start centralized for simplicity, but be ready to add decentralized elements for performance-critical paths. Don’t be dogmatic about patterns—adapt to your actual needs.

Lesson 4: Error Handling Is Not an Afterthought

What happened: Three days before the presentation, our system started randomly failing mid-workflow. Sometimes it would work perfectly. Sometimes it would crash at the Matching stage. We couldn’t reproduce it consistently.

The culprit? We hadn’t properly thought through error handling and retry logic.

When the Geographic Optimization agent occasionally timed out (calling an external routing API), the orchestrator didn’t know what to do. Should it retry? Fail the entire workflow? Use cached data? We hadn’t decided, so it did… nothing. The workflow just hung.

The fix: We implemented a proper error handling strategy:

- Transient errors (API timeouts, rate limits): Automatic retry with exponential backoff

- Data errors (invalid formats, missing fields): Fail fast with clear error messages

- Agent failures (crashes, unhandled exceptions): Graceful degradation or workflow termination

- Circuit breakers: Stop calling failing services temporarily

We also added health checks for each agent and built a monitoring dashboard that showed which agents were healthy, which were struggling, and where workflows were getting stuck.

The takeaway: Design your error handling strategy before you need it. Multi-agent systems have multiple failure points—you need a plan for each.

Lesson 5: Observability Is Your Lifeline

The reality: When you have six agents making decisions, tracing why the system did something is surprisingly hard.

We’d get a final result—”Truck 47 assigned to Load 203 with a profitability score of 0.73″—and someone would ask, “Why not Truck 51?” Good question. Let us trace through six agents’ decision-making processes and get back to you in an hour.

The solution: We built comprehensive observability from day one (well, after we learned this lesson the hard way):

- Correlation IDs: Every workflow got a unique ID that flowed through all agents

- Decision logging: Each agent logged not just what it decided, but why

- Trace visualization: We built a simple UI showing the complete workflow path

- Performance metrics: How long each agent took, where bottlenecks occurred

This wasn’t just for debugging. During the demo, being able to show the complete decision-making trace added tremendous value. People could see the “why” behind the system’s choices.

The takeaway: Observability isn’t optional. Build it in from the start. You’ll need it for debugging, optimization, and explaining your system to stakeholders.

Lesson 6: Test With Realistic Data (Or Suffer the Consequences)

Our mistake: Early testing used clean, simple data. “Truck A at Location X needs to go to Location Y.” Everything worked beautifully.

Then we tested with real-world data—trucks with maintenance schedules, loads with special handling requirements, routes with toll roads and weight restrictions. The system fell apart.

The Load Analysis agent couldn’t handle commodity classifications it hadn’t seen. The Geographic Optimization agent didn’t account for truck height restrictions. The Matching agent made legally impossible pairings (refrigerated loads assigned to dry van trucks).

The fix: We generated a comprehensive test dataset that included:

- Edge cases and unusual scenarios

- Real-world constraints and restrictions

- Data quality issues (missing fields, inconsistent formats)

- High-volume stress tests

Testing with realistic data exposed problems we never would have found with clean test cases.

The takeaway: Your multi-agent system will only be as robust as your test data. Invest time in creating comprehensive, realistic test scenarios.

Lesson 7: Documentation Is Not Optional

The trap we fell into: We were moving fast, iterating constantly. Documentation felt like it would slow us down.

Then Boki needed to modify an agent while I worked on the orchestrator. He asked, “What format does the Matching agent expect?” I said, “Uh, let me look at the code…”

We wasted hours re-explaining decisions, rediscovering why we did things certain ways, and reverse-engineering our own system.

What we learned: In multi-agent systems, clear documentation isn’t just helpful—it’s essential. When multiple agents (and multiple developers) need to work together, shared understanding is critical.

We created:

- Agent specifications: What each agent does, expects, and returns

- Communication contracts: Data formats and semantic meanings

- Architecture diagrams: How agents relate and communicate

- Decision logs: Why we made specific design choices

This documentation wasn’t just for us. It became crucial for our presentation, helping the audience understand the system quickly.

The takeaway: Document as you build, not after. Your documentation should be as modular as your agents—each agent gets its own spec.

Lesson 8: The First Model You Pick Won’t Be the Last

What happened: We started with a specific Claude model for all agents. It worked well for most agents but was overkill for simple agents and underpowered for complex reasoning tasks.

The optimization: We ended up using different model configurations for different agents:

- Simple validation tasks: Lighter, faster models

- Complex reasoning and decision-making: More powerful models

- Real-time operations: Models optimized for latency

This wasn’t premature optimization—it was necessary optimization. Our Match Scoring agent needed sophisticated reasoning. Our Intake agent needed fast validation. One-size-fits-all didn’t work.

The takeaway: Design your system to support per-agent model configuration from day one. You’ll want this flexibility as you optimize.

Lesson 9: Your Demo Will Break in New and Creative Ways

Murphy’s Law of Multi-Agent Systems: No matter how much you test, the live demo will find a new way to surprise you.

During our presentation prep, we ran the demo hundreds of times successfully. Then, during the actual presentation, we hit an edge case we’d never encountered. One agent returned data in a slightly different format, and the downstream agents didn’t know how to handle it.

Thankfully, our error handling caught it, and we could show the system gracefully failing and recovering. It actually made for a better demo—showing real-world resilience rather than a perfect, unrealistic scenario.

The takeaway: Build in graceful degradation and recovery. Your system will fail—make those failures informative and recoverable rather than catastrophic.

Lesson 10: The Technology Is the Easy Part

The biggest surprise: Building the multi-agent system was challenging but straightforward. The hard part was everything else.

Getting stakeholder buy-in. Explaining how the system works to non-technical audiences. Managing expectations about what agents can and can’t do. Addressing concerns about AI decision-making. Thinking through governance and oversight.

The technology works. The organizational and human challenges are what determine success or failure.

The takeaway: Plan for the human side of multi-agent systems as carefully as you plan the technical architecture. Change management, communication, and governance are as critical as your code.

What We’d Do Differently

If we were starting over tomorrow, here’s what we’d change:

- Start smaller: Three agents maximum for the initial build

- Observability first: Build logging and tracing before complex logic

- Document earlier: Write the spec before writing the code

- Test realistically: Generate comprehensive test data on day one

- Plan for errors: Design error handling as part of the architecture, not as an afterthought

- Involve stakeholders early: Show working prototypes weekly, not just at the end

The Bottom Line

Building multi-agent systems is incredibly rewarding. When it works—when you see multiple specialized agents collaborating intelligently to solve complex problems—it feels like magic.

But getting there requires embracing complexity, planning for failure, and learning from mistakes. The lessons in this post cost us late nights, debugging sessions, and more than a few “what were we thinking?” moments.

Our hope is that by sharing these lessons, your journey will be smoother than ours. You’ll still make mistakes—that’s unavoidable—but maybe you’ll make different ones and learn new lessons to share with the next person.

Multi-agent systems are the future of intelligent applications. They’re also ready for production today. The question is: are you ready to learn, adapt, and build them?

Thanks for following along with this series:

- Part 1: Why Multi-Agent Systems? The Truck Brokering Use Case

- Part 2: Introduction to Orchestration Patterns in Multi-Agent Systems

- Part 3: Platform Wars: Copilot Studio vs Azure AI Foundry

- Part 4: Lessons from the Trenches: What We Learned Building Multi-Agent Systems

Stay tuned for our deep-dive mini-series on orchestration patterns, where we’ll explore centralized, decentralized, hierarchical, and hybrid patterns in detail.